The annual Healthcare Information and Management Systems Society conference (HIMSS11) in Orlando was very interesting, and there were a few notable trends and developments. Interestingly, Circadence was the only WAN Optimization vendor exhibiting at the conference, and after speaking with a number of CIOs, CTOs, and network managers I could see why. There seem to be very few proven solutions for the emerging medical markets, including teleradiology, telepathology, and EMR. Several factors seem to explain this, including price, complexity, and technology limitations. Circadence has a terrific set of technologies that meet the needs of the medical community, and they were very well received at the event. Major trends:

Cloud, Cloud, Cloud

Of the 600+ exhibitors, I doubt more than a handful didn't talk about "the cloud" or try to position themselves "in the cloud". What does this mean? Well, that's the question of the day. "Cloud" has become a catch-all for folks trying to latch on to the latest trend. The reality is that cloud computing is nothing new and has gone by many names over the last couple of decades: ASP, Server Based Computing, Thin Client Computing, Remote Resourced, etc. Where the current trend perhaps differs is in the breadth of offerings and the extremely low cost of entry. Relatively cheap bandwidth, truly cheap computing power, and the explosion of capability in hardware and software virtualization have created an environment where anyone can stand up a data serving capability quickly and economically...to a point. For all the terrific things that "cloud" services provide, there are still some limitations worth exploring further:

1. Bandwidth: Bandwidth has limits, and when thousands of users are aggregated in a data center, that bandwidth must be shared across multiple users, technologies, and requirements. Many organizations move their data and computing into the cloud only to find that their transfer rates are lower, sometimes much lower, than they were before the move. This makes sense, though, as one of the trade-offs of cloud computing is that you go from your own dedicated, defined-path communications and connections to a shared environment. It is critical that any organization using the cloud for data and computing tasks clearly understands what SLA it is getting and how that SLA will be monitored and enforced.

2. Accessibility: How will your users and/or customers access the content and capability that is now hosted in a central store? The move to the cloud creates very large hub-and-spoke connections where the cloud is the hub and everything connected to it is a spoke. Because direct control of the connections between end users and the content is lost, some mechanism must be put in place to assure the performance and reliability of the user connection.

3. Mobility: One of the best reasons to move to cloud computing and cloud-based data repositories is their ability to facilitate mobile computing. Mobile can be anything from a remote office or a home office to a laptop or a smart phone. By offering near-universal access entry points, public clouds facilitate mobile usage. The question becomes: how do you assure, control, monitor, and secure these remote connections?

4. Control: How do organizations control and manage the connections of their users and customers to their data and computing assets? It will be important for any organization interested in cloud computing to understand the limitations inherent in hosted data and computing and have a solid plan for control.
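
To make "monitored and enforced" concrete, here is a minimal Python sketch of an SLA compliance check. It assumes hypothetical SLA terms (a minimum throughput per measurement sample and a target fraction of samples that must meet it) and throughput samples you collect yourself; real SLAs define availability, latency, and throughput in contract-specific ways.

```python
from dataclasses import dataclass


@dataclass
class SlaTerms:
    # Hypothetical SLA terms; real contracts define these differently.
    min_throughput_kbps: float   # minimum acceptable throughput per sample
    target_compliance: float     # fraction of samples that must meet the minimum


def sla_compliance(samples_kbps: list[float], terms: SlaTerms) -> tuple[float, bool]:
    """Return (fraction of samples meeting the minimum, whether the target is met)."""
    if not samples_kbps:
        raise ValueError("no throughput samples collected")
    ok = sum(1 for s in samples_kbps if s >= terms.min_throughput_kbps)
    fraction = ok / len(samples_kbps)
    return fraction, fraction >= terms.target_compliance


if __name__ == "__main__":
    # Hypothetical measurements, e.g. one throughput sample per minute.
    terms = SlaTerms(min_throughput_kbps=2000, target_compliance=0.95)
    samples = [2400, 2310, 1800, 2550, 2100, 2600, 2250, 2700, 2050, 2480]
    fraction, met = sla_compliance(samples, terms)
    print(f"{fraction:.0%} of samples met the minimum; SLA met: {met}")
```

The point of the sketch is that "enforced" requires agreed terms plus your own measurements; a provider's dashboard alone cannot tell you whether your users are getting what you pay for.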

Mobile

The second major trend from the conference concerned "Mobile": mobile computing, mobile access, and mobile storage. Mobile can mean a lot of different things to an organization; these were the most important or most frequently mentioned:

1. Anytime/Anywhere Access: Organizations are interested in making their content and capabilities available to their users and customers from wherever those users may be, whenever they need access. This includes being able to connect effectively and securely from an undefined location back to the enterprise (whether on-campus or in a cloud). How will connections be secured? How will performance and reliability be assured? What about compliance?

2. Any Device Access: This was a very popular topic throughout the event...how to enable users and customers to access the data and applications they need from whatever device they happen to be using. The devices in question ranged from high-end workstations to smart phones. Supporting multiple devices must address both supporting the required applications resident on the platform and supporting a controlled and assured connection from the device to the content (hub on-campus or in the cloud).

Circadence provides solutions that can help form a strong foundation for organizations looking at Cloud Computing or Mobile Access for their users and customers. Regardless of the direction organizations take, however, it will be critical for them to understand the limitations and mitigation requirements, in addition to the many valuable capabilities that Cloud and Mobile bring.

Monday, February 28, 2011

Thursday, February 17, 2011

Mobile - Part 2

When talking about network performance and acceleration, there can be a tendency to get stuck in a rut talking about downloading and to forget that uploading is equally important. Any acceleration or optimization solution should take both the downlink and the uplink into consideration and provide an equivalent performance impact in each direction. Today I'm working remotely from the RSA Conference in San Francisco and writing this over a WiFi connection at a local Starbucks. Between the conference and the rain, it's safe to say that a seat inside Starbucks is popular at the moment. The network is as congested as the line for coffee. While I am doing rather simple tasks from a network perspective, including updating this blog, I've also had to download and upload large files of different types, including PowerPoint presentations, JPEGs, Excel spreadsheets, Project files, and applications. Most of the connections have been SSL-encrypted links back to our corporate enterprise. Using our MVO optimization technology, I'm seeing gains of up to 7x in my UPLOADS to the enterprise over SSL. This is making a huge difference for me and is keeping me productive in less than ideal circumstances.

Tuesday, February 15, 2011

What is "Mobile" in the enterprise?

Today I've had the opportunity to experience mobile connectivity to the enterprise in several different ways, using several different platforms and multiple network types. The experience gave me a firsthand look at some of the challenges we still face, as well as at just how valuable remote connectivity can be. I was also able to see the benefits of WAN Optimization integrated into the fabric of the enterprise versus simply installing appliances at a couple of endpoints.

Some of the highlights, part 1

I am constantly connected to the enterprise in a basic way using my Android smart phone. I use the Touchdown application to connect seamlessly with our corporate Exchange server for mail, contacts, calendar items, and more. Additionally, I've installed applications for remote access and remote desktop access that link me back to my desktop systems, allowing both control and file access. This has saved me more than once by giving me immediate access to large content that I could send directly from my desktop. Our MVO product for mobile devices assures network connectivity for mobile applications.

Next...laptops, power usage, and media.

Friday, February 11, 2011

End-Point network control; protecting SLAs

The value of providing optimized and accelerated connections from the cloud or content network edge to the end-user device is clear and well known. What about dealing with interruptions, congestion, and other effects that occur within the end user's local environment? Circadence has developed unique technology within our MVO optimization that maintains Session Persistence at the end user. This patented technology is called Link Resilience™, and it maintains application sessions and mitigates momentary outages and network disruptions, whether the issue is with the CDN’s network, the local network provider used by the end user (e.g., their ISP), or a problem within the end user's own LAN environment. When an interruption occurs, MVO maintains the local application session until the connection is restored. Once the problem has been resolved, the application automatically reconnects and picks up where it left off, preventing further delays, ensuring content is delivered, and minimizing the interruption to the end user. MVO with Link Resilience gives CDNs the technology they need to deliver content to their end users reliably, consistently, completely, and as efficiently as possible.

Most applications are designed to assume that the network link connecting the application client to the application server will be persistent and reliable. That assumption works fairly well when the network link is perfectly behaved and performing consistently.

Applications connected over WAN links are significantly affected by the reliability and resiliency of the network link. Without MVO, the application will fail, sometimes with unpredictable results, when the WAN link is disrupted. In many cases file transfers must start over from the beginning, databases lock up, and transactions break.

Circadence MVO provides a highly efficient, consistent, and high-performance connection over the WAN. This enables applications to achieve peak performance and reliability.

MVO includes unique and patented Link Resilience, which enables applications to remain active and alive during WAN outages. MVO can be configured to terminate the local connection immediately, or keep the application connection alive for an administratively configured period of time.
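
The post does not describe how Link Resilience works internally, so the following is only a hypothetical Python sketch of the general idea: a hold timer that keeps the local application session alive during a WAN outage, buffers what the application sends, and either replays that data when the link returns or terminates the session once an administratively configured hold period expires (a hold period of zero terminates immediately). The class and method names are illustrative, not an MVO API.

```python
import time
from enum import Enum, auto


class SessionState(Enum):
    CONNECTED = auto()
    HELD = auto()        # WAN link down; local application session kept alive
    TERMINATED = auto()


class HeldSession:
    """Hypothetical outage hold timer; not Circadence's implementation."""

    def __init__(self, hold_seconds: float, clock=time.monotonic):
        # hold_seconds == 0 means "terminate the local connection immediately".
        self.hold_seconds = hold_seconds
        self.clock = clock
        self.state = SessionState.CONNECTED
        self.outage_started = None
        self.pending: list[bytes] = []  # data written while the link was down

    def link_down(self):
        if self.state is SessionState.CONNECTED:
            self.outage_started = self.clock()
            self.state = (SessionState.HELD if self.hold_seconds > 0
                          else SessionState.TERMINATED)

    def send(self, data: bytes, transmit):
        self._check_expiry()
        if self.state is SessionState.CONNECTED:
            transmit(data)
        elif self.state is SessionState.HELD:
            self.pending.append(data)  # buffer instead of failing the application
        else:
            raise ConnectionError("session terminated after hold period")

    def link_up(self, transmit):
        self._check_expiry()
        if self.state is SessionState.HELD:
            for chunk in self.pending:  # resume where the application left off
                transmit(chunk)
            self.pending.clear()
            self.state = SessionState.CONNECTED
            self.outage_started = None

    def _check_expiry(self):
        # Once the hold period has elapsed, give up and drop buffered data.
        if (self.state is SessionState.HELD
                and self.clock() - self.outage_started > self.hold_seconds):
            self.state = SessionState.TERMINATED
            self.pending.clear()
```

The injectable `clock` makes the hold period easy to test without waiting in real time; a production design would also need to resynchronize sequence state with the far end, which this sketch leaves out.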

Monday, February 7, 2011

Accessing the Cloud...451Group CloudScape report highlight

The 451 Group and Tier1 Research CloudScape Report from Cloud Codex, January 2011, says: "...there is no better mechanism for accessing cloud services than use of the public Internet, and it is likely that many clouds will, one way or another, end up being accessible publicly – even those that start out as purely private. This trend introduces additional security complexity to cloud offerings – as well as additional questions about availability – which can now include carrier networks along the path from end user to the cloud." Access the report at: Cloud Codex Report 2011

Circadence technologies, including MVO and MVS, can help small, medium, and large enterprises address these challenges and make the most of Cloud Computing.

Circadence MVO offers the most flexible SaaS deployment options in the industry, supporting fast, efficient deployment into public and private clouds. Circadence MVO dynamically adjusts performance based on real-time conditions to increase data throughput and reduce lost and resent packets. MVO optimization is independent of the application being delivered and is just as effective with opaque data such as encrypted SSL content, enabling security to be maintained end to end.
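
The post does not say how MVO adapts to real-time conditions. As a generic illustration of the idea only, here is the textbook additive-increase/multiplicative-decrease (AIMD) rule at the heart of standard TCP congestion avoidance: grow the sending window steadily while delivery succeeds, and cut it sharply when loss is detected. The parameter values are the classic defaults, not MVO settings.

```python
def aimd_step(cwnd: float, loss_detected: bool, increase: float = 1.0,
              decrease: float = 0.5, floor: float = 1.0) -> float:
    """Return the congestion window (in packets) for the next round trip."""
    if loss_detected:
        # Multiplicative decrease: back off quickly to relieve congestion.
        return max(floor, cwnd * decrease)
    # Additive increase: probe gently for more available bandwidth.
    return cwnd + increase


if __name__ == "__main__":
    cwnd = 10.0
    for rtt, loss in enumerate([False, False, True, False, False]):
        cwnd = aimd_step(cwnd, loss)
        print(f"round trip {rtt}: cwnd = {cwnd:g} packets")
```

AIMD treats every loss as congestion, which is exactly the assumption that breaks down on lossy wireless or satellite links; that gap is where more sophisticated, condition-aware rate control earns its keep.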

Circadence MVO includes Link Resilience™. This unique technology was originally developed for the US Military and enables applications to be completely insulated from momentary network outages. Link Resilience automatically mitigates disruptions lasting from milliseconds to hours.

Applications resume traffic exactly where they left off as soon as the connection is restored. MVO with Link Resilience™ creates the most robust networked application environment.

See additional information at http://www.circadence.com/

Great Seminar on Configuring Routers, Switches, and Firewalls

Josh Stephens, the Head Geek at SolarWinds, is giving a webinar on Configuration Management. These are great opportunities to learn the basics, and I highly recommend them, but space is usually limited. Sign up here: http://app.en25.com/e/er.aspx?s=826&lid=1458&elq=a443a3f25b4d41639069728f71d07090

Saturday, February 5, 2011

Circadence MVO Improvements for Highly Degraded Transactional Application

The examples below show the effect of adding MVO to a degraded application connection experiencing the problems discussed in our previous postings on Latency, Fragmentation, and Application Behavior:

The image below again shows the effects of high latency and fragmentation on a transactional application. The application achieved ~63kb/s with a 44.2% error rate; the actual “goodput” on this connection was less than 30kb/s.

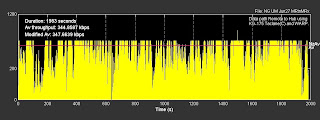

The image below shows the same application and network connection as Figure 6 above, but the Circadence MVO platform has been enabled. Performance increased from ~63kb/s with a 44.2% error rate and “goodput” less than 30kb/s, to ~348kb/s with zero error rate and “goodput” greater than 348kb/s (with peaks above 800kb/s, note the change in scale versus Figure 6). This represents a greater than 10x improvement in actual application throughput and significantly increased overall performance with no application outages.
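
The "greater than 10x" figure follows directly from the goodput numbers quoted above. Because the unoptimized goodput was less than 30kb/s, dividing ~348kb/s by 30kb/s gives a lower bound on the improvement:

```python
def improvement_factor(before_kbps: float, after_kbps: float) -> float:
    """Ratio of optimized to unoptimized goodput."""
    return after_kbps / before_kbps


# Figures from this post: goodput below 30kb/s without MVO, ~348kb/s with MVO.
# Since 30kb/s is an upper bound on the "before" value, the result is a lower bound.
factor = improvement_factor(before_kbps=30, after_kbps=348)
print(f"at least {factor:.1f}x")
```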

Application Inefficiency and the Total System Effect

The applications using a given transport mechanism are responsible for dealing effectively and efficiently with the environment in which they are implemented. Often, however, applications are introduced into environments for which they were not designed and which they are not well equipped to handle. Transactional systems such as database applications, Point of Sale, and real-time response systems are noted for poor performance in degraded networks. Any application that relies on “chatty” behavior (frequent acknowledgment of packets) or on multiple transactions can also be adversely impacted by degraded network conditions. These types of applications are also highly sensitive to latency and fragmentation in the network transport medium. The image below (using the same test application, now configured to conduct database transactions, over the same transport medium as in the Latency and Fragmentation examples) shows the effects of latency and fragmentation on a transactional system. Of a rated 5Mb/s capacity, performance dropped from ~557kb/s with zero errors to ~63kb/s with a remarkable 44.2% error rate; the actual “goodput” on this connection was less than 30kb/s. The relatively long interruptions shown are the result of the application either locking or halting as retransmissions and fragmented packets act as a packet flood, in effect creating a localized, self-inflicted denial of service.
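
One way to see why "chatty" applications suffer so badly on high-latency links is a rough model of transaction time: every round trip the application waits on costs one full RTT, while the payload itself only costs its serialization time on the link. This Python sketch assumes a hypothetical 600 ms RTT (roughly typical of geostationary satellite paths, not a measurement from this test), a hypothetical 100 KB transaction, and the 5Mb/s link rating from the example; it ignores loss, fragmentation, and windowing.

```python
def transaction_time_s(round_trips: int, rtt_ms: float, payload_bytes: int,
                       link_kbps: float) -> float:
    """Rough time for one transaction: protocol waiting plus serialization."""
    waiting_s = round_trips * rtt_ms / 1000.0
    serialization_s = payload_bytes * 8 / (link_kbps * 1000.0)
    return waiting_s + serialization_s


if __name__ == "__main__":
    # Hypothetical values: 600 ms RTT, 100 KB payload, 5 Mb/s link.
    for trips in (2, 40):
        t = transaction_time_s(trips, rtt_ms=600, payload_bytes=100_000, link_kbps=5000)
        print(f"{trips:>2} round trips: {t:.2f} s")
```

The same 100 KB costs 0.16 s to serialize either way; almost all of the difference between the two cases comes from the extra round trips.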

Fragmentation

Fragmentation significantly reduces overall system performance by causing applications to retransmit the original data if the distant-end application is unable to use fragmented packets. This can become a compounding effect: the sending application keeps retransmitting packets that are now well known to become fragmented in flight, causing more retransmissions of the same packets and leading to more fragmentation. The overall effect creates conditions of extreme local congestion, application loss, and poor network utilization. In these environments, the ratio between “goodput” (the actual usable data successfully delivered) and throughput (the overall amount of transport medium being used, regardless of quality) degrades very quickly. As seen in the image below (using the same application and transport medium as in our Latency example), the introduction of encryption has heavily degraded the performance of the connection. This erosion of performance is caused by packet fragmentation introduced by the encryption process. The encryptors, in this example an AES256 VPN and a high-speed bulk encryptor, must add x bytes of overhead data to every packet; if a packet is already (MTU-x)+1 bytes or larger, it will be fragmented every time. Of a rated 5Mb/s capacity, performance dropped from ~557kb/s with zero errors to ~121kb/s with a 6.3% error rate.
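
The (MTU-x)+1 rule can be checked with a small Python sketch of IPv4 fragmentation. It assumes a 1500-byte MTU, a 20-byte outer IPv4 header with no options, and a hypothetical encryptor overhead of 73 bytes standing in for x (actual overhead depends on the VPN mode, cipher, and padding). Each fragment repeats the IP header, and every fragment except the last must carry a multiple of 8 data bytes.

```python
import math

IPV4_HEADER_BYTES = 20  # outer IPv4 header, assuming no IP options


def fragment_count(packet_bytes: int, overhead_bytes: int, mtu: int = 1500) -> int:
    """Number of IPv4 fragments once an encryptor adds overhead_bytes (x).

    overhead_bytes is assumed to include the encryptor's outer IPv4 header.
    """
    total = packet_bytes + overhead_bytes
    if total <= mtu:
        return 1  # still fits in a single frame
    data = total - IPV4_HEADER_BYTES  # bytes that must be split across fragments
    per_fragment = (mtu - IPV4_HEADER_BYTES) // 8 * 8  # 8-byte aligned data per fragment
    return math.ceil(data / per_fragment)


if __name__ == "__main__":
    x = 73  # hypothetical encryptor overhead in bytes
    for size in (1400, 1427, 1428, 1500):
        print(size, fragment_count(size, x))
```

With x = 73 and a 1500-byte MTU, 1427-byte packets still fit, while every packet from 1428 bytes ((MTU-x)+1) upward is split, and a sender that keeps retransmitting at that size will be split every time.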

System Latency

Latency contributes to performance degradation through two principal effects: the delay in transmission and acknowledgment, which creates conditions where applications must pause; and a perception of loss, created when a sent packet is not acknowledged within a given time frame. As seen in the image below, latency can have a profound impact on performance. In this example the network transport medium is a high-quality SATCOM connection rated at 5Mb/s with no competing traffic and an open transponder. System latency is the only highly significant performance-limiting factor at play. The application is a test profile conducting transactions of a fixed size. Of a rated 5Mb/s capacity, the application was able to achieve ~557kb/s with zero errors.
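
The first latency effect (pausing while waiting for acknowledgments) puts a hard ceiling on what a single connection can achieve: with at most one window of unacknowledged data in flight, throughput cannot exceed window/RTT, however fast the link is. This Python sketch assumes a hypothetical 65,535-byte window (the TCP maximum without window scaling) and hypothetical RTTs; the post does not report either value for this test.

```python
def window_limited_kbps(window_bytes: int, rtt_ms: float, link_kbps: float) -> float:
    """Upper bound on one connection's throughput when it must wait for ACKs."""
    # Bits per millisecond is numerically equal to kilobits per second.
    bound_kbps = window_bytes * 8 / rtt_ms
    return min(bound_kbps, link_kbps)


if __name__ == "__main__":
    # Hypothetical: 65,535-byte window on a 5 Mb/s link.
    for rtt in (50, 600):
        print(f"RTT {rtt} ms: at most {window_limited_kbps(65_535, rtt, 5000):.0f} kb/s")
```

On a 50 ms terrestrial path the same window can fill the 5Mb/s link; at a satellite-like 600 ms it cannot, which illustrates how latency alone can leave most of a rated link unused.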

Performance Degradation Causes

What causes performance problems when accessing applications over a network connection, or when transferring content from one location to another? The chain of technologies used in communications networks can create significant performance problems for network-reliant applications, problems that are more complex than simple propagation delay phenomena. These include the following major performance limiters:

1. System Latency, the total time for a full and complete data transaction over a given set of transport medium, expressed in milliseconds (ms)

a. Propagation Delay: the total time required for the physical act of transporting a data bit over a given medium;

b. Route Queuing: the cumulative delay created by routing and switching equipment in the full and complete data connection path;

c. SATCOM Time Slotting: the delay in satellite communications networks where access to satellite transponders is granted according to a revolving round-robin distribution of limited access; for example, 250ms of satellite access every 500ms;

d. Data Modification Delay: the cumulative delay created by applications and devices that interact with the data stream, such as encryptors, load balancers, error correctors, etc.;

e. End Point Equipment Saturation: the cumulative delay created by terminal end equipment, such as servers and workstations, that is near or at processor, memory, or disk capacity.

2. Fragmentation, the splitting of a data packet into smaller packets due to packet size limitations

a. Fragmentation from addition of overhead data

i. VPN Encapsulation

ii. Encryption

iii. Packet signing and tagging

b. IP Encapsulation

i. SATCOM

ii. Long Haul wireless

iii. Cellular

iv. Other Digital to Analog to Digital conversions

c. In-flight damage

3. Application inefficiency

a. “Circular” behaviors, such as communications paths that ultimately result in receiving or responding to self-generated transmissions

b. Unneeded updating cycles, such as full replication when differential is sufficient

c. Inefficient network interfaces

i. Poor retransmission handling, allowing multiple retransmission of the same content

ii. Ineffective loss handling, creating locks or runs because of low-level loss

iii. Fixed rates and responses, unable to deal with dynamic transport conditions independently
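
The System Latency components in item 1 add together. Here is a rough one-way latency budget sketch in Python for a geostationary SATCOM path. Propagation assumes both ground stations sit directly beneath the satellite at 35,786 km altitude (real slant ranges are longer), the time-slot wait uses the 250ms-every-500ms example above as a worst case, and the queuing, data-modification, and end-point values are hypothetical placeholders.

```python
from dataclasses import dataclass

SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GEO_ALTITUDE_KM = 35_786


@dataclass
class LatencyBudget:
    """One-way latency components from item 1 (a-e), in milliseconds."""
    propagation_ms: float
    route_queuing_ms: float
    satcom_slot_wait_ms: float
    data_modification_ms: float
    endpoint_saturation_ms: float

    def total_ms(self) -> float:
        return (self.propagation_ms + self.route_queuing_ms + self.satcom_slot_wait_ms
                + self.data_modification_ms + self.endpoint_saturation_ms)


def geo_propagation_ms() -> float:
    """Ground to satellite to ground, assuming stations directly beneath the satellite."""
    return 2 * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_PER_S * 1000


def worst_case_slot_wait_ms(slot_ms: float, period_ms: float) -> float:
    """A packet that just misses its slot waits for the rest of the period."""
    return period_ms - slot_ms


if __name__ == "__main__":
    budget = LatencyBudget(
        propagation_ms=geo_propagation_ms(),
        route_queuing_ms=20,         # hypothetical
        satcom_slot_wait_ms=worst_case_slot_wait_ms(250, 500),
        data_modification_ms=5,      # hypothetical (encryptors, load balancers, etc.)
        endpoint_saturation_ms=10,   # hypothetical
    )
    print(f"propagation: {budget.propagation_ms:.0f} ms, total: {budget.total_ms():.0f} ms")
```

Even with modest placeholder values for the other components, propagation and time slotting dominate, which is why adding more raw bandwidth does little for a latency-bound satellite connection.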
